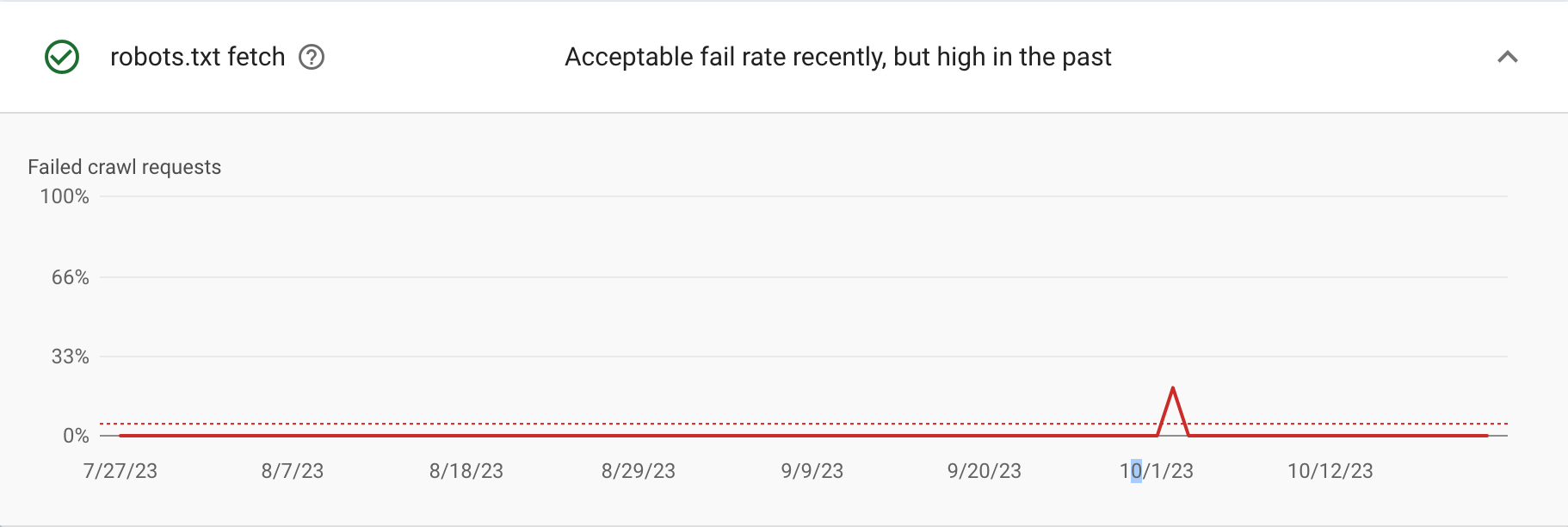

A very popular WordPress SEO tool may actually be harming your website. Why is it causing harm, you ask? The real reason isn't entirely Yoast's fault. The plugin creates a virtual robots.txt file: instead of existing on disk, it is loaded from the database and served as if it were a file. That is where this all goes wrong.

The problem, per se, is that bots constantly need to find the robots.txt file, and when they can't fetch it reliably it can cause search glitches. As most users know, the robots.txt file sits in your site's main (root) folder and tells bots how to crawl your site: what they can access and what they can't. This causes a lot of confusion for small sites. What should bots crawl, and what should they skip?

If you manage or own a website, you have to decide this yourself. Typically you don't want bots going into the `/wp-admin/` area, where they could expose files that are mostly CMS-related. You should also consider keeping bots out of your site search: they will try URLs like `.com/?s=search%20term`, which typically return a pile of results for whatever term they use. Blocking them from search keeps them on the natural paths through your website and avoids exposing unwanted data.
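A quick way to sanity-check rules like these before publishing them is Python's standard `urllib.robotparser`. The sketch below assumes a hypothetical set of rules and uses `example.com` as a placeholder domain; the `admin-ajax.php` exception mirrors what WordPress's own default robots.txt allows, and is an assumption you can drop if you don't need it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for a WordPress site; adjust to your own install.
ROBOTS_TXT = """\
User-agent: *
# Python's parser applies the first matching rule, so the narrow Allow comes
# before the broad Disallow (Google instead picks the most specific rule).
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /?s=
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for url in (
        "https://example.com/wp-admin/options.php",
        "https://example.com/?s=search%20term",
        "https://example.com/wp-admin/admin-ajax.php",
        "https://example.com/blog/some-post/",
    ):
        # "*" means "any bot without its own group in the file".
        print(url, "->", "allowed" if rp.can_fetch("*", url) else "blocked")
```

The admin pages and the search URL come back blocked, while AJAX requests and normal posts stay crawlable.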

Lastly, you should tell bots that you have a sitemap listing the actual pages on your website, which helps them crawl all the good pages that are available. You can also block specific bots from crawling your back-end pages, and ask bots that are over-requesting your site to slow down. Most sites manage that at the firewall, but you can also apply some of it in the robots.txt file as a public statement of your rules.
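Here is a sketch of those three ideas in robots.txt form, again checked with Python's `urllib.robotparser`. `BadBot` and `GreedyBot` are hypothetical user-agent names, `example.com` is a placeholder, and `sitemap_index.xml` is the sitemap index path Yoast generates by default. Keep in mind that `Crawl-delay` is a request, not an enforcement: Googlebot ignores it, which is one reason rate limiting usually lives in the firewall.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules. A bot obeys only the group that names it, so GreedyBot
# needs its own copy of the /wp-admin/ rule in addition to its Crawl-delay.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: GreedyBot
Crawl-delay: 10
Disallow: /wp-admin/

User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    # Sitemap lines apply to every bot, regardless of which group they follow.
    print("sitemaps:", parser.site_maps())
    # BadBot is shut out of the whole site.
    print("BadBot may crawl /:", parser.can_fetch("BadBot", "https://example.com/"))
    # GreedyBot is asked to wait between requests; other bots get no delay.
    print("GreedyBot delay:", parser.crawl_delay("GreedyBot"))
    print("Googlebot delay:", parser.crawl_delay("Googlebot"))
```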

So how do you fix this on a Yoast website? Typically, just creating a real robots.txt file will override the virtual one, so requests go to the actual static file. The web server can hand that file out directly instead of loading WordPress and the database for every bot request, so it won't bounce with 500 errors and your site can handle as many bots as possible.
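If you prefer to script the fix, a minimal sketch is below. It assumes you pass in the WordPress root folder (the one containing `index.php` and `wp-content`); `write_static_robots` is a hypothetical helper name, and the rules and `example.com` domain are placeholders to replace with your own. It refuses to overwrite an existing robots.txt so it can't clobber rules you've already written.

```python
import sys
from pathlib import Path

# Placeholder rules; replace the domain and paths with your own.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /?s=

Sitemap: https://example.com/sitemap_index.xml
"""


def write_static_robots(wp_root: Path, content: str = ROBOTS_TXT) -> bool:
    """Create a physical robots.txt in the WordPress root folder.

    Returns True if a new file was written, or False if a robots.txt already
    exists there, in which case the existing file is left untouched.
    """
    target = wp_root / "robots.txt"
    try:
        # Mode "x" creates the file and fails if it already exists.
        with target.open("x", encoding="utf-8") as handle:
            handle.write(content)
    except FileExistsError:
        return False
    return True


if __name__ == "__main__":
    # Hypothetical usage: python write_robots.py /var/www/html
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    if write_static_robots(root):
        print(f"created {root / 'robots.txt'}")
    else:
        print("robots.txt already exists; not overwritten")
```

Afterwards, load `/robots.txt` in a browser and confirm it shows your static rules rather than the plugin's generated ones.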